YouTube’s newly announced guidelines on AI-generated content mark a significant shift for content creation. These changes matter to individual creators and professional content agencies alike, particularly the rules for videos that use AI to mimic musicians’ voices.

YouTube’s evolving policy on AI-generated content

YouTube’s policy change comes at a time when AI-generated content, especially deepfakes, is becoming increasingly sophisticated. This technology’s ability to mimic reality raises concerns about authenticity, copyright infringement, and ethical use. For creators and agencies, these guidelines are not just rules to follow, but a framework to understand the boundaries of creative freedom and legal constraints in the age of AI.

Adapting to these guidelines means more than avoiding penalties such as content takedowns or demonetization. It means aligning content strategies with the new rules, so that creators keep a favourable standing on the platform and can still use AI’s creative possibilities within the limits the guidelines set.

YouTube’s dual approach to AI-generated content

YouTube’s approach to AI-generated content creates a distinct dichotomy: a stringent set of rules for the music industry and a more lenient framework for other content types. This bifurcation reflects YouTube’s need to balance its relationship with different content creators and industries, acknowledging the unique challenges and impacts of AI-generated content in various domains.

This dual policy approach stems from the specific risks AI poses in different content categories. For instance, AI-generated music that mimics an artist’s voice has direct implications for copyright and artist identity, which calls for stricter guidelines. However, the different levels of stringency between the two categories create potential challenges and ambiguities, especially in enforcement and compliance.

For creators and agencies, this means navigating a complex set of rules that require careful consideration of each content type and its potential implications. Understanding these distinctions is crucial for developing content strategies that align with YouTube’s policies, protect the integrity of the platform and preserve the trust of its users.

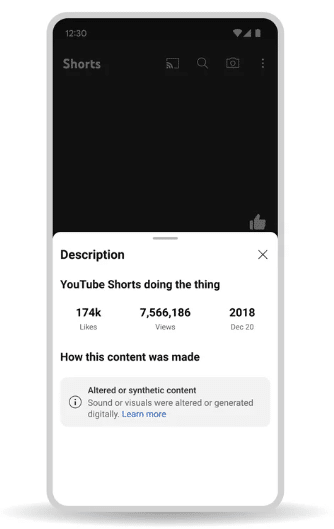

The new labelling mandate for AI-generated content

YouTube’s policy now requires creators to label AI-generated content that is “realistic,” and it specifies where and how these labels should appear. However, because the policy does not clearly define what counts as “realistic,” creators and agencies face challenges in ensuring compliance.

The ambiguity surrounding the definition of “realistic” AI-generated content means that creators and agencies must err on the side of caution and transparency. This involves adhering to the labelling requirements and understanding the broader implications of AI-generated content in terms of viewer perception and trust.

Non-compliance with these labelling requirements can lead to severe penalties, including content removal and demonetization. Creators and agencies therefore need to stay informed about the guidelines and comply with them, so that their content remains accessible and monetizable on the platform.

Content removal policies for AI-generated videos

YouTube’s content removal policies, particularly for videos simulating identifiable individuals, add another layer of complexity to content moderation. These policies consider various factors, such as whether the content is parody or satire and the public figure status of the individuals featured.

YouTube’s process for evaluating removal requests is intricate and partly subjective. Because the outcome depends on judgements such as whether a video counts as parody or satire and whether the person depicted is a public figure, creators and agencies cannot easily predict how their content will be assessed or what action a removal request might require. Creators must therefore balance creative expression with compliance and make sure their content does not inadvertently violate YouTube’s policies.

Navigating copyright issues and legal ambiguities

The legal landscape for AI-generated content, especially in the absence of specific laws regulating AI deepfakes, is fraught with uncertainties. YouTube’s policies might set precedents in this emerging field, but the lack of established legal frameworks poses challenges for creators and agencies in ensuring their content remains within legal boundaries.

Beyond legal compliance, there are significant ethical considerations in creating and distributing AI-generated content. Issues around authenticity, intellectual property rights and the potential for misuse underscore the need for creators and agencies to approach AI-generated content responsibly.

As YouTube forges its path in moderating AI-generated content, its policies could influence broader legal and ethical standards in this field. For creators, this means adhering to current guidelines and staying abreast of evolving legal and ethical discussions surrounding AI-generated content.

Specific rules for AI-generated music content

YouTube has established specific rules for AI-generated music content that mimics an artist’s unique voice. These rules cover both the process for music removal requests and the exceptions for content used in news reporting, analysis or critique. Those exceptions leave only a narrow space for creative exploration within the guidelines.

Balancing creativity with responsibility in the future

YouTube’s strategy on AI innovation emphasizes balancing creativity with responsibility. In practice, this means using AI technology to moderate content and enforce community guidelines effectively, while recognizing that AI can also introduce new forms of abuse. The aim of these efforts is to keep the community safe and maintain compliance with the guidelines. For creators, aligning with these efforts means following the rules and contributing to a safe and responsible online environment.

The future of AI-generated content on YouTube holds immense potential for innovation and creativity. However, balancing content freedom with ethical considerations and community safety remains a significant challenge. As AI technologies advance, creators must navigate the fine line between leveraging these technologies for creative expression and upholding ethical standards and community safety. Staying informed about evolving guidelines and technological advancements is crucial.

The developments in AI-generated content on YouTube will significantly shape the future of content creation. For creators and agencies, embracing these changes while maintaining a focus on responsibility, compliance, and innovation will be key to success in this evolving landscape.